Introduction: The Ghosts of Technology Past

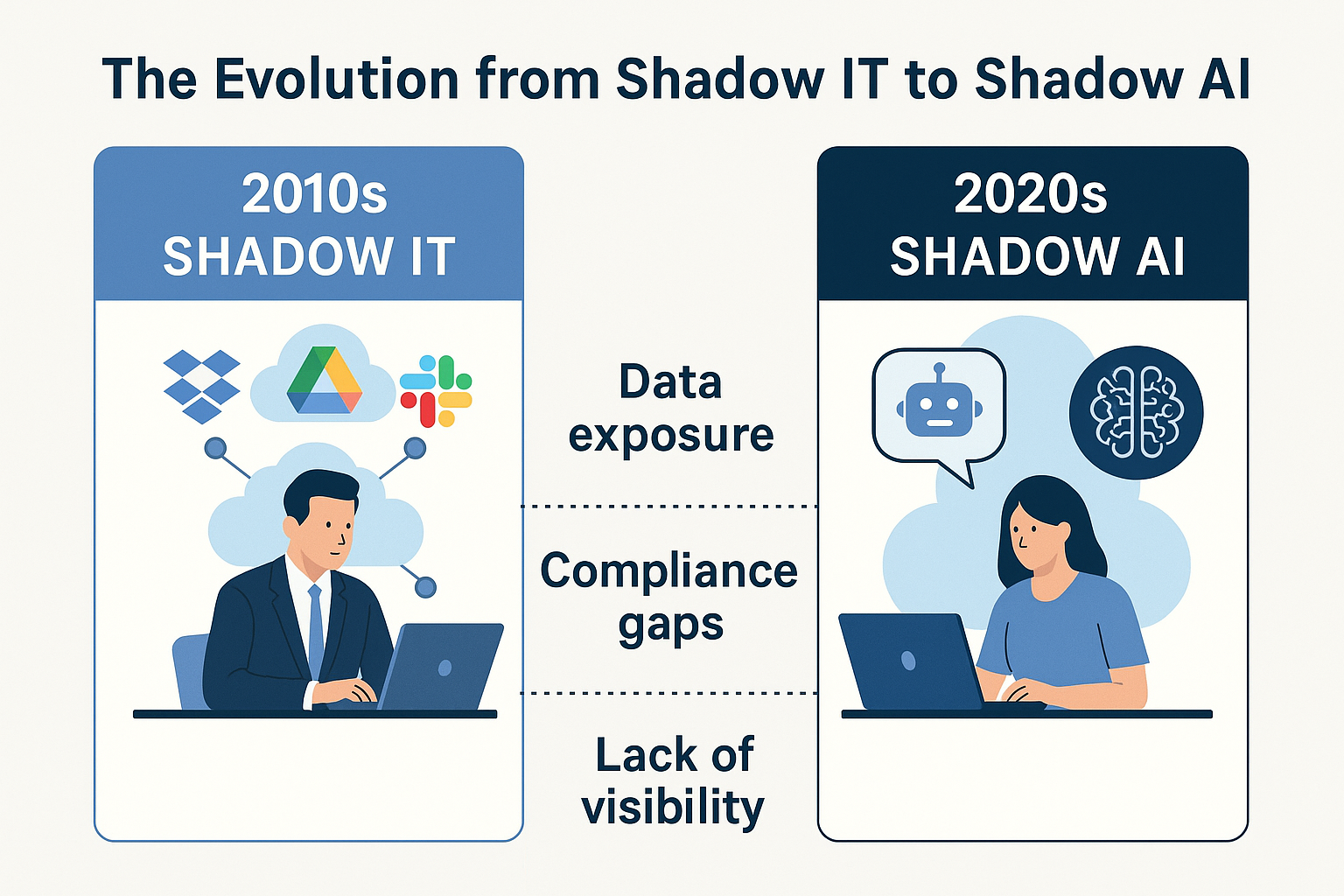

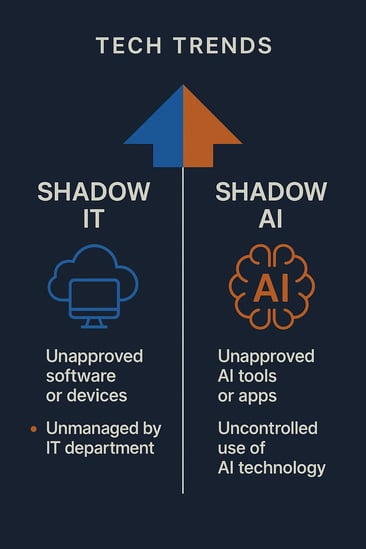

In the early 2010s, enterprises faced a growing, yet invisible, enemy—Shadow IT. Employees were using unsanctioned cloud services, such as Dropbox, Google Drive, and Slack, to complete tasks more efficiently, thereby bypassing corporate security and IT controls. Though often well-intentioned, these habits introduced enormous risks, from data leakage to compliance violations.

Fast forward to 2025, and history is repeating itself. Today’s challenge is Shadow AI: employees and teams using generative AI tools without organizational approval or oversight. Whether the tool is ChatGPT, Gemini (formerly Bard), or Midjourney, adoption has raced ahead of corporate governance. Once again, innovation is outpacing policy.

Enterprises that once battled the explosion of unsanctioned SaaS now face a new wave of invisible risk—this time powered by algorithms and large language models, rather than storage and collaboration apps.

So what can we learn from the past? And how can we stop Shadow AI from becoming the next decade’s major compliance scandal?

Lessons from Shadow IT

Before we can understand how to control Shadow AI, it’s worth remembering how Shadow IT emerged—and how organizations learned to tame it.

The Rise of SaaS Without Sanction

At the height of cloud transformation, business units rapidly adopted SaaS tools to drive efficiency and productivity. Marketing teams collaborated in real time on platforms like Trello and Google Docs. HR leveraged web-based survey systems to streamline employee engagement. Finance, seeking convenience, sometimes stored highly sensitive spreadsheets in personal Dropbox accounts—all often outside the visibility and control of the IT department.

What started as resourceful “rogue innovation” felt innocuous, promising faster workflows and empowered teams. However, IT leaders quickly recognized a new layer of risk. Every unapproved SaaS application—no matter how well-intentioned—created additional gateways into the enterprise. Sensitive business and customer data began flowing beyond corporate firewalls into third-party or even consumer-grade environments, many of which lacked the enterprise-grade encryption and robust security controls necessary to protect this data.

As a result, security teams faced an urgent set of challenges:

- Cloud adoption without oversight: Employees gravitated toward SaaS tools that made their jobs easier, unaware—or unconcerned—about the organization’s established risk tolerance and security policies. This decentralized adoption left IT out of the loop and unable to enforce controls.

- Sensitive data exposure: Proprietary information and regulated data found their way into unsanctioned applications, making it difficult to maintain confidentiality and data integrity, and often breaching compliance requirements by being stored in insecure or unmonitored places.

- Policy enforcement gaps: Traditional security tools, designed for centralized systems, struggled to detect and manage this scattered SaaS ecosystem. Without real-time insight, IT teams had no effective mechanism to monitor, govern, or remediate these newly introduced risks.

The lessons learned from these early days of Shadow IT resonate strongly as organizations now contend with the rise of Shadow AI. The same dynamics—innovation outpacing policy, user-driven adoption outside IT’s purview, and overlooked data governance—are at play, but the stakes continue to grow as technology evolves.

Parallels to Shadow AI

Just as SaaS did before it, AI has entered the workplace faster than policies can keep pace. Employees across departments—from marketing and HR to software engineering—are leveraging AI to save time and spark creativity.

Unfortunately, many of these tools are operating in the shadows.

A recent study revealed that 56% of security teams admit to using unapproved AI tools, while only 32% of organizations have formal AI controls in place. If even the teams responsible for enforcing policy are working around it, and roughly two-thirds of organizations lack formal controls, much of today’s AI use is happening without oversight. Those are the same conditions that once made Shadow IT a cybersecurity nightmare.

The Modern Shadow AI Scenario

Imagine this:

A marketing coordinator pastes sensitive client data into ChatGPT for faster content creation. A developer uses Copilot to write code without realizing that snippets may include licensed IP. Or an HR recruiter relies on an AI résumé screener without testing for bias or fairness.

Each of these actions bypasses official governance—and introduces risk at scale.

The Key Similarities Between Shadow IT & Shadow AI

Why the Stakes Are Higher This Time

Shadow IT was a data governance problem. Shadow AI expands that challenge into both a data governance problem and a decision-making governance problem.

Unlike legacy cloud tools, AI systems introduce layers of complexity that go far beyond data storage and access. These solutions don’t just process information—they create new content, drive real-time recommendations, and deeply shape business outcomes. This new paradigm exposes organizations to heightened ethical, legal, and operational risks that traditional technology governance never had to address.

1. Data Retention & Intellectual Property

When employees submit sensitive prompts or proprietary information into generative AI systems, that input can be retained, stored, and potentially even used to further train and refine public or third-party models. This means that valuable code, confidential client data, and internal communications could inadvertently become part of a vendor’s dataset, presenting not only the threat of a data leak but also the risk of unintentionally transferring intellectual property to external parties. The implications stretch beyond confidentiality—this scenario can result in a loss of ownership and legal control over valuable assets.

2. Model Bias & Reputational Risk

While Shadow IT’s risk centered on unauthorized data movement, Shadow AI’s greater threat is the introduction of unmonitored and potentially biased algorithms into business processes. If employees or teams deploy unvetted AI tools for critical decisions such as recruitment, lending, or customer service, the organization risks perpetuating or amplifying bias. That opens the door to discrimination claims, regulatory scrutiny, and substantial reputational damage. The consequences are not limited to operational mishaps; they can erode stakeholder trust and public perception.

3. Escalating Regulatory Pressure

The regulatory landscape around AI is rapidly evolving. With frameworks such as the EU AI Act, NIST’s AI Risk Management Framework, and the White House’s non-binding Blueprint for an AI Bill of Rights, there’s a global push to define clear standards for AI transparency, accountability, and governance. Enterprises are likely to be required to maintain verifiable, auditable controls around their AI systems, much like the compliance rigor that emerged after the introduction of GDPR. Failure to establish robust AI governance now will not only elevate risk exposure but may also create substantial compliance debt, with penalties that could surpass those seen in the early days of modern data privacy regulation.

The stakes have never been higher. Without decisive action, Shadow AI threatens to drive new governance failures at a speed and scale that far exceed those of the cloud adoption era.

Preventing Repeat Mistakes

Learning from the history of Shadow IT means avoiding the same missteps. Fortunately, organizations today have a proven blueprint for success: prioritize comprehensive visibility into technology usage, implement robust governance frameworks, and foster a culture of security-first thinking at every level. By investing in specialized tools to uncover hidden adoption, establishing clear policies that define acceptable use, and delivering consistent education that empowers employees, businesses can proactively identify and mitigate risks—transforming lessons from the past into a foundation for secure and responsible innovation with AI.

1. Visibility: Discover What You Can’t See

You can’t secure what you don’t know exists. To protect your organization against Shadow AI, it’s essential to implement AI visibility tools—modern successors to Cloud Access Security Brokers (CASBs), which were once used to uncover Shadow IT. These platforms provide critical insight into where and how AI is being adopted across your business, surfacing even the tools that users haven’t disclosed to IT.

Industry-leading AI visibility solutions include:

- Nightfall AI and Netskope for real-time monitoring and detection of sensitive data flowing into generative AI environments, including usage tracking and anomaly detection.

- Microsoft Purview for discovering, classifying, and governing data as it moves through AI pipelines, ensuring compliance with evolving regulatory frameworks and safeguarding intellectual property.

- BetterCloud for automated enforcement of AI-specific policies, including permission management, automated alerting on unsanctioned use, and policy remediation across cloud and AI ecosystems.

- Power BI or Tableau for continuous risk reporting, aggregating AI activity data into actionable dashboards that visualize risk exposure, usage trends, and compliance metrics.

Together, these platforms deliver an “AI activity map”—a dynamic visual representation of your organization’s AI footprint. This map details which departments and individuals are utilizing AI tools, the nature of data being processed, the workflows involved, and how information moves across both sanctioned and unsanctioned applications. By giving security and IT leaders centralized visibility, these tools enable organizations to quickly identify blind spots, assess risk, and establish meaningful controls that keep innovation secure and compliant.
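As a rough illustration of what feeds an AI activity map, the sketch below counts requests to known AI domains in web proxy logs and groups them by department. It is a minimal example under stated assumptions, not how any of the products above work: the `user,department,url` log format, the `AI_DOMAINS` watchlist, the `SANCTIONED` set, and the `build_activity_map` function are all hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical watchlist of AI tool hostnames; a real one would be maintained centrally.
AI_DOMAINS = {"chatgpt.com", "gemini.google.com", "www.midjourney.com"}
# Hypothetical set of tools the organization has approved.
SANCTIONED = {"gemini.google.com"}


def build_activity_map(log_lines):
    """Count requests to watched AI domains per (department, host, status)."""
    activity = Counter()
    for line in log_lines:
        # Assumed log format: user,department,url
        parts = line.strip().split(",", 2)
        if len(parts) != 3:
            continue  # skip malformed rows
        _user, department, url = parts
        host = urlparse(url).hostname
        if host in AI_DOMAINS:
            status = "sanctioned" if host in SANCTIONED else "unsanctioned"
            activity[(department, host, status)] += 1
    return activity


sample = [
    "alice,marketing,https://chatgpt.com/c/abc",
    "bob,engineering,https://gemini.google.com/app",
    "carol,marketing,https://chatgpt.com/",
    "dave,finance,https://example.com/report",
]

for (dept, host, status), hits in sorted(build_activity_map(sample).items()):
    print(dept, host, status, hits)
```

Even a simple count like this shows which departments rely on unsanctioned tools. Dedicated visibility platforms add what this sketch lacks, such as inspection of the data being sent and coverage of traffic that never crosses the corporate proxy.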

2. Governance: Set Clear Boundaries & Approval Paths

Every enterprise should establish a Shadow AI governance framework modeled after Shadow IT playbooks:

- Require vendor risk assessments for any external AI service.

- Define acceptable data input types (e.g., no PII, PHI, or IP).

- Mandate prompt-logging policies and review processes.

- Publish an internal AI policy guide accessible to all employees.

Governance transforms “AI curiosity” into structured innovation.
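The acceptable-input and prompt-logging rules above can be sketched in a few lines of code. The example below is a hypothetical illustration, not a production control: the two regular expressions cover only email addresses and US Social Security number formats, and the `screen_prompt` and `log_prompt` helpers and the audit record fields are assumptions made for this sketch.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; real PII/PHI detection needs far broader coverage.
BLOCKED_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def screen_prompt(prompt):
    """Return the sorted names of blocked data types found in the prompt."""
    return sorted(
        name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(prompt)
    )


def log_prompt(user, tool, prompt):
    """Build a JSON audit record that stores a hash instead of the raw prompt."""
    findings = screen_prompt(prompt)
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "findings": findings,
        "allowed": not findings,
    }
    return json.dumps(record)


print(screen_prompt("Summarize this for jane.doe@example.com, SSN 123-45-6789"))
print(log_prompt("alice", "chatgpt", "Draft a tagline for our spring campaign"))
```

Hashing the prompt instead of storing it keeps the audit log from becoming a second copy of the sensitive data. Whether reviewers need the raw text is a policy decision that the governance framework itself should settle.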

3. Culture: Educate, Empower, & Encourage Compliance

Technology policies succeed only when people understand them. Organizations should focus on awareness and empowerment, not punishment.

Train employees on responsible AI use, including:

- How AI models learn from input data.

- What constitutes sensitive or proprietary content.

- Why “shadow experimentation” creates risk for everyone.

Transparency fosters trust—and reduces the allure of going rogue.

Case Study Perspective: Shadow AI in Industry

Different sectors face different Shadow AI pressures:

- Healthcare: Clinicians using unapproved AI diagnostic tools risk HIPAA violations. These tools may process patient data outside secure environments, creating the potential for data leakage and breaches of protected health information. Beyond regulatory fines, such incidents can erode patient trust and invite heightened scrutiny from compliance bodies.

- Financial Services: Analysts experimenting with AI trading algorithms risk exposure of market data. When sensitive financial models or proprietary trading strategies are shared with unvetted AI platforms, confidential market positions can be leaked. This not only jeopardizes competitive advantage but also exposes institutions to regulatory action and reputational harm.

- Manufacturing: Engineers using AI for predictive maintenance may upload proprietary sensor data. If this operational data is transferred into public AI models, unique manufacturing processes or insights into equipment performance can be unintentionally disclosed. The result is increased vulnerability to industrial espionage and loss of intellectual property, with potential downstream impacts on supply chain security.

Each example echoes the early days of cloud adoption—where productivity gains masked unseen vulnerabilities. While the drive to innovate is universal, the unintended consequences of Shadow AI can ripple through compliance, operational security, and trade secret protection if proactive policies and controls are not established.

The Role of Cyber Advisors

Cyber Advisors has seen this cycle before. We’ve guided hundreds of organizations—from SMBs to enterprise leaders—through the transition from Shadow IT chaos to secure, compliant cloud governance.

Today, our teams are helping those same organizations confront Shadow AI.

Through services like vCISO advisory, Continuous Threat Exposure Management (CTEM), and AI Governance Framework Development, we help clients:

- Identify hidden AI usage across teams.

- Develop governance frameworks that align with their organization’s security policies.

- Reduce Shadow AI risk exposure through policy, training, and visibility tools.

- Create scalable adoption protocols for future AI integration.

Our goal: to ensure innovation and compliance evolve together, not in conflict.

Conclusion: Avoiding the Next Shadow Crisis

The story of Shadow IT teaches a clear lesson—what begins as convenience can quickly become chaos. Shadow AI represents the next chapter in that same book.

History doesn’t have to repeat itself. Organizations that act now—establishing visibility, defining governance, and educating employees—can transform Shadow AI from a liability into a strength.

If your business is unsure where to start, Cyber Advisors can help.

Contact us today to discuss your organization’s AI governance readiness and build a clear, secure, and ethical roadmap for AI adoption. Together, we can ensure your company’s innovation stays in the light—not the shadows.